Much of my work centers on creating software for visualizing three-dimensional objects. These applications run on computers with two-dimensional displays, so there is a problem conveying information in the third (depth) dimension. The human brain is hard-wired to convert two-dimensional visual information into an internal three-dimensional representation of a scene. We can leverage this specialized hardware to convey a sense of depth using only a two-dimensional screen.

You might assume that I believe stereo 3D to be the best way to convey depth information. But you'd be wrong. I am an evangelist for stereoscopic visualization. I love stereo 3D displays. But there are at least four other 3D visualization techniques that are more important than stereo. You must nail those four before you even think about stereo 3D. Below I have summarized my list of seven ways to enhance the perception of depth in 3D computer applications.

Without further ado, here is the list, in order from most important to least important:

Occlusion

Occlusion is the most important cue for communicating depth. (I do not mean "ambient occlusion"; that is a kind of shading, which is technique #2.) Occlusion means that you cannot see an object when it is behind another object. It's that simple. But displaying occlusion correctly is the most important part of conveying depth in computer graphics. In my caffeine video, atoms in the front occlude the atoms behind them. Fortunately, almost nobody gets this wrong, because everyone recognizes that it looks terrible when it is done wrong. OpenGL has always provided a z-buffer (depth buffer) to manage occlusion, so most 3D applications get occlusion right. Another approach, used by side-scrolling video games, is the "painter's algorithm" (draw the stuff in back first), which gives a sense of depth by occlusion.

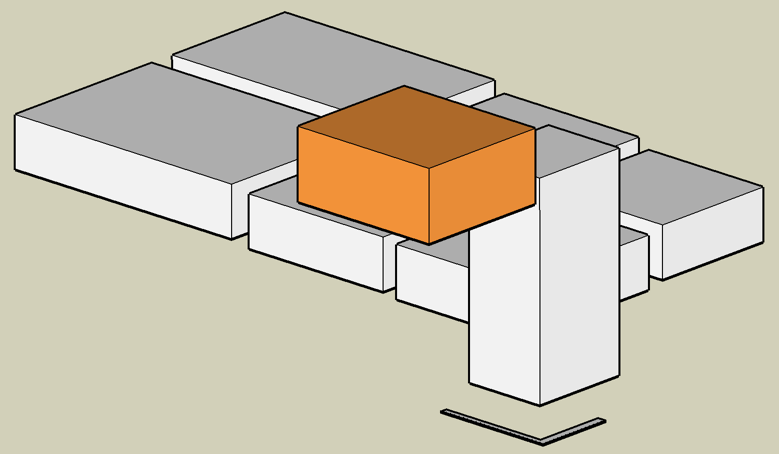

Occlusion tells me that the orange thing is in front of the other things.
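To make the two approaches concrete, here is a minimal Python sketch on a one-dimensional "scanline". It is an illustration only, not code from any real renderer: the fragment tuples and the function names `render_zbuffer` and `render_painter` are made up for this example, and smaller depth values are assumed to be closer to the camera.

```python
# Each fragment is (pixel_x, depth, color); smaller depth = closer to the camera.
# The data and function names are hypothetical, chosen only for illustration.
FRAGMENTS = [
    (0, 5.0, "blue"), (1, 5.0, "blue"), (2, 5.0, "blue"),
    (1, 2.0, "orange"), (2, 2.0, "orange"), (3, 2.0, "orange"),
]


def render_zbuffer(fragments, width):
    """Keep, per pixel, the color of the nearest fragment seen so far."""
    color = [None] * width
    depth = [float("inf")] * width
    for x, z, c in fragments:
        if z < depth[x]:  # the depth test: nearer fragments win
            depth[x] = z
            color[x] = c
    return color


def render_painter(fragments, width):
    """Sort back to front, then simply overwrite pixels in that order."""
    color = [None] * width
    for x, _z, c in sorted(fragments, key=lambda f: f[1], reverse=True):
        color[x] = c
    return color


if __name__ == "__main__":
    print(render_zbuffer(FRAGMENTS, 4))
    print(render_painter(FRAGMENTS, 4))
```

The z-buffer version gives the same picture no matter what order the fragments arrive in, while the painter's version depends entirely on the sort. That is one reason the z-buffer is the more forgiving default for general 3D scenes.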

One interesting display technique that does not respect occlusion is volume rendering by maximum intensity projection. Although volume rendering is difficult to do well, the maximum intensity projection method yields a robust rendering of brightness and detail. But the image looks the same whether viewed front-to-back or back-to-front. I know from experience that this can be confusing. But the advantages of the maximum intensity projection can sometimes make this tradeoff worthwhile.
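The front/back ambiguity follows directly from the definition: each pixel takes the maximum value along its ray, and a maximum does not care about order. A small sketch, assuming the volume is stored as nested lists indexed `volume[z][y][x]` and viewed along the z axis (the function name and the data are made up for this illustration):

```python
def max_intensity_projection(volume):
    """Project a volume[z][y][x] along z by keeping the brightest voxel per ray."""
    rows = len(volume[0])
    cols = len(volume[0][0])
    return [
        [max(z_slice[y][x] for z_slice in volume) for x in range(cols)]
        for y in range(rows)
    ]


if __name__ == "__main__":
    # Two 2x2 slices; the brightest voxel (9) sits in the second slice.
    volume = [
        [[1, 0], [0, 0]],
        [[0, 9], [0, 0]],
    ]
    front_to_back = max_intensity_projection(volume)
    back_to_front = max_intensity_projection(volume[::-1])
    print(front_to_back == back_to_front)  # True: slice order does not matter
```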

Shading

By shading, I mean all of the realistic colors, gradations, shadows and highlights seen on objects in the real world. Shading is so important that when some folks say "3D graphics", all they mean is fancy shading. This is one area that has been steadily evolving in computer graphics. My caffeine video uses a primitive (by modern standards) Lambertian shading model for the spheres, with a single point light source. The Lambertian model is sufficient to convey depth and roundness, but looks rather fake compared to state-of-the-art rendering. Part of my excruciating jealousy of QuteMol comes from the clever shading techniques they have used. For this reason I plan to continue to improve the shading methods in my application.
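For readers who have not met it, the Lambertian model says that the diffuse brightness of a surface point is proportional to the cosine of the angle between the surface normal and the direction to the light, clamped at zero. Here is a minimal sketch with a single point light; the helper names are invented for this example and it is not the shader used in the video.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert_intensity(normal, surface_point, light_position):
    """Diffuse intensity in [0, 1]: max(0, N . L) for a point light."""
    n = normalize(normal)
    to_light = tuple(l - p for l, p in zip(light_position, surface_point))
    l_dir = normalize(to_light)
    n_dot_l = sum(a * b for a, b in zip(n, l_dir))
    return max(0.0, n_dot_l)  # surfaces facing away from the light get no light


if __name__ == "__main__":
    normal = (0.0, 0.0, 1.0)
    point = (0.0, 0.0, 0.0)
    print(lambert_intensity(normal, point, (0.0, 0.0, 5.0)))   # light straight ahead
    print(lambert_intensity(normal, point, (5.0, 0.0, 0.0)))   # light at grazing angle
    print(lambert_intensity(normal, point, (0.0, 0.0, -5.0)))  # light behind the surface
```

On a sphere the normal changes smoothly across the surface, so this one dot product is enough to produce the gradual falloff that makes a flat disc read as a ball.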

Just look at the beautiful shading possible with QuteMol. I'm so jealous:

Perspective

Perspective, what artists call foreshortening, is the visual effect that objects close to you appear larger than objects far away from you. In my caffeine video, nearby atoms appear larger than distant atoms, especially when the molecule is close to the camera. This is one area where my worship of QuteMol breaks down. QuteMol appears to use orthographic projection, not perspective. Close atoms are rendered the same size as distant ones. But it took me a long time to notice, because QuteMol's beautiful shading is otherwise so effective at communicating depth.
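The difference between the two projections comes down to a single division by depth. The sketch below assumes a simple pinhole camera at the origin looking down the +z axis; the function names and the `focal_length` parameter are assumptions made for this illustration, and it does not reproduce how QuteMol or my application builds its projection matrix.

```python
def project_perspective(x, y, z, focal_length=1.0):
    """Pinhole projection: screen position shrinks as depth z grows."""
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


def project_orthographic(x, y, z):
    """Orthographic projection: depth is simply ignored."""
    return (x, y)


if __name__ == "__main__":
    near = (1.0, 1.0, 2.0)
    far = (1.0, 1.0, 4.0)
    print(project_perspective(*near))   # (0.5, 0.5)
    print(project_perspective(*far))    # (0.25, 0.25)
    print(project_orthographic(*near))  # (1.0, 1.0)
    print(project_orthographic(*far))   # (1.0, 1.0)
```

Doubling the distance halves the projected size under perspective, while the orthographic result does not change at all. That is why equally sized atoms look identical at any depth in an orthographic view.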

Motion parallax

There are several ways that motion can reveal depth information by showing parallax, in which closer objects appear more displaced than more distant objects. When an object rotates or moves, parallax effects reveal important depth information. In my caffeine video, the rotations of the molecule help to convey the sense of depth.
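The "closer objects appear more displaced" rule is easy to check numerically. Using the same kind of pinhole camera (an assumption for this sketch, with a made-up function name), moving the camera sideways by some amount shifts a point's image by an amount inversely proportional to the point's depth:

```python
def screen_shift(depth, camera_shift, focal_length=1.0):
    """Horizontal image displacement of a point when the camera moves sideways.

    A point at depth z projects to f * x / z. Moving the camera by
    camera_shift changes x to x - camera_shift, so the image moves by
    -f * camera_shift / z, whatever the starting x was.
    """
    if depth <= 0:
        raise ValueError("depth must be positive")
    return -focal_length * camera_shift / depth


if __name__ == "__main__":
    near_shift = screen_shift(depth=2.0, camera_shift=0.1)
    far_shift = screen_shift(depth=10.0, camera_shift=0.1)
    # The near point moves five times as far across the screen as the far one.
    print(abs(near_shift) > abs(far_shift))  # True
```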

Many 3D visualization applications use mouse dragging to rotate the scene. Users are constantly rotating the scene with the mouse while trying to examine the objects. These users crave motion parallax. In response, I have been experimenting with automated subtle wiggling of the scene so the user might not need to constantly drag the mouse. But I am not sure I have nailed the solution yet.
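One simple way such a wiggle could be driven is a small sinusoidal rotation about the vertical axis. This is only a sketch of the general idea, not the scheme I actually settled on; the function name, the 3 degree amplitude, and the 2 second period are all arbitrary assumptions.

```python
import math


def wiggle_angle(time_s, amplitude_deg=3.0, period_s=2.0):
    """Rotation angle (degrees) about the vertical axis at a given time."""
    return amplitude_deg * math.sin(2.0 * math.pi * time_s / period_s)


if __name__ == "__main__":
    # Sample the wiggle every quarter second for one full period.
    for step in range(9):
        t = step * 0.25
        print(f"t={t:.2f}s angle={wiggle_angle(t):+.2f} deg")
```

A sine wave is a natural choice because the scene slows down smoothly at each extreme instead of snapping back. The amplitude and period would need tuning by eye.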

Another important source of parallax is movement of the viewer. This is the basis of head tracking in 3D graphics. Every time I give a stereoscopic 3D demo, the first thing the viewer does after putting on the 3D glasses is to move her head from side to side, because that is the natural response to wanting to maximize the perception of depth. But it doesn't work, because my applications do not do head tracking (yet). Motion parallax is more important than stereoscopic 3D.

The video below from 2007 is a famous example of the effectiveness of head tracking for conveying depth.

Stereoscopy

Your left and right eyes see slightly different views of a scene, and your brain can use these two images to perceive your distance from the objects you see. This is static parallax, as opposed to motion parallax (described in the previous section). Done properly, stereoscopic viewing can complete the sense of depth in a scene. But there are a lot of ways to get it wrong. That is why stereoscopic display must be approached with extreme care. My caffeine video uses YouTube's awesome stereoscopic support to display the molecule in stereo 3D. I like viewing it with my NVIDIA 3D Vision glasses (which require a special 120 Hz monitor), though for some reason the aspect ratio is wrong in this mode. The other 3D modes seem to work fine. Part of what I enjoy about stereo 3D is that there are so many details that must be done correctly, and I like details.
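One of those details is how far apart the left-eye and right-eye images of a point land on the screen. By similar triangles, with the eyes a distance e apart, the screen at distance D from the viewer, and the point at distance Z, the separation is e * (Z - D) / Z. The sketch below just evaluates that formula; the function name and the default values (65 mm eye separation, 60 cm viewing distance) are assumptions for illustration.

```python
def screen_parallax(object_distance, eye_separation=0.065, screen_distance=0.6):
    """Signed on-screen separation (meters) between right-eye and left-eye images.

    Zero     : the point appears exactly at the screen surface.
    Positive : the point appears behind the screen.
    Negative : the point appears in front of the screen.
    """
    if object_distance <= 0:
        raise ValueError("object_distance must be positive")
    return eye_separation * (object_distance - screen_distance) / object_distance


if __name__ == "__main__":
    for z in (0.3, 0.6, 1.2, 100.0):
        print(f"Z={z:6.1f} m  parallax={screen_parallax(z) * 1000:+7.2f} mm")
```

Note that the parallax approaches the eye separation as the object recedes to infinity, and never exceeds it. Content that asks for more than that forces the eyes to diverge, which is one of the classic ways to get stereo wrong.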

Fog

When you see a mountain on the horizon far away, it appears much paler and bluer than it does close up. That distant mountain can be almost indistinguishable from the color of the sky behind it. The more distant an object is, the closer its color becomes to the color of the sky. Even on a clear day, what I am calling "fog" has an important effect on extremely distant objects such as far-off mountains. On a foggy day, the same thing occurs on a vastly smaller scale. In either case, that change in color is an important cue about the distance of an object. In computer graphics, fog (or depth cueing) is easy to compute and has been used for ages, especially when other 3D effects were too hard to achieve. My molecule viewing application uses fog, but at a low setting, so it might not be visible in my caffeine video. Fog is especially important as objects approach the rear clipping plane, to avoid "pop-ups", the sudden appearance or disappearance of objects. It is more pleasing if the objects disappear by gradually receding into the fog.

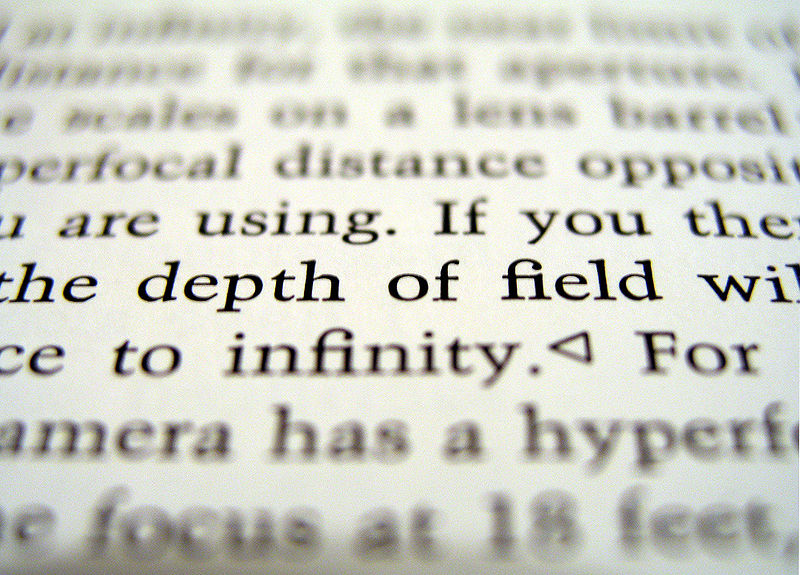
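Fog really is cheap to compute. A common formulation is linear fog, where a blend factor falls from 1 at a start distance to 0 at an end distance, and the final color is a mix of the object color and the fog color. The sketch below assumes that linear model; the function names are made up, and it is not necessarily the exact formula my application uses.

```python
def fog_factor(depth, fog_start, fog_end):
    """Linear fog: 1.0 means no fog, 0.0 means fully fogged."""
    if fog_end <= fog_start:
        raise ValueError("fog_end must be greater than fog_start")
    factor = (fog_end - depth) / (fog_end - fog_start)
    return min(1.0, max(0.0, factor))  # clamp to [0, 1]


def apply_fog(color, fog_color, factor):
    """Blend an RGB color toward the fog color."""
    return tuple(factor * c + (1.0 - factor) * f for c, f in zip(color, fog_color))


if __name__ == "__main__":
    red = (1.0, 0.0, 0.0)
    gray = (0.5, 0.5, 0.5)
    for depth in (0.0, 5.0, 10.0):
        f = fog_factor(depth, fog_start=0.0, fog_end=10.0)
        print(depth, apply_fog(red, gray, f))
```

If the fog end distance is placed at the rear clipping plane and the fog color matches the background, objects have already faded into the background by the time they are clipped, which avoids the abrupt pop.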

Depth of field

When you photograph a scene using a wide-aperture lens, the focal point of your scene may be in sharp focus, but other objects that are either much closer to the camera or much farther away appear blurry. This blurriness is a cue that those other objects are not at the same distance as the focal point. This depth cue can also convey a false sense of scale in trick photography. An aerial city scene with an extremely narrow depth of field can appear to be just a tiny model of a city. Depth of field is not widely used in interactive computer graphics, because it is expensive to compute, it is a subtle effect, and to really do it properly, the focused part of the image should follow the user's gaze. That would require not just head tracking but eye tracking. Even Hollywood movies make only light use of depth of field, in part because it is not possible to be certain where the audience's gaze is directed.
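The amount of blur can be estimated with the thin-lens "circle of confusion" formula: for a lens of focal length f and aperture diameter A focused at distance S1, a point at distance S2 is smeared into a disc of diameter A * |S2 - S1| / S2 * f / (S1 - f). The sketch below evaluates that idealized formula; the function name and the sample lens values are assumptions, and real lenses and real-time renderers only approximate it.

```python
def circle_of_confusion(focal_length, f_number, focus_distance, object_distance):
    """Blur disc diameter on the sensor for an ideal thin lens (same units as inputs)."""
    if focus_distance <= focal_length:
        raise ValueError("focus_distance must exceed focal_length")
    if object_distance <= 0:
        raise ValueError("object_distance must be positive")
    aperture = focal_length / f_number  # aperture diameter
    magnification = focal_length / (focus_distance - focal_length)
    defocus = abs(object_distance - focus_distance) / object_distance
    return aperture * magnification * defocus


if __name__ == "__main__":
    # A hypothetical 50 mm lens focused at 2 m, looking at an object 4 m away.
    wide = circle_of_confusion(0.05, 2.0, 2.0, 4.0)     # f/2, wide aperture
    narrow = circle_of_confusion(0.05, 16.0, 2.0, 4.0)  # f/16, narrow aperture
    print(wide > narrow)  # True: the wide aperture blurs more
```

An object exactly at the focus distance gets a blur diameter of zero, and the blur grows with aperture size, which matches the wide-aperture behavior described above.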

Most of the techniques I know of can be assigned to one of those seven categories. Have I missed any other depth-conveying techniques? Comments are welcome below.
