Back in 2016 I figured out how to draw an infinite plane, stretching to the horizon, in my VR headset. I did this because I wanted a very simple scene, with the ground at my feet, stretching out to infinity. This blog post describes some of the techniques I use to render infinite planes.

These techniques work at the low-level OpenGL (or DirectX) layer. So you won't be doing this in Unity or Unreal or Blender or Maya, unless you are developing or writing plugins for those systems.

The good news is that we can use the usual OpenGL things like model, view, and projection matrices to render infinite planes. Also we can use clipping, depth buffering, texturing, and antialiasing. But we first need to understand some maths beyond what is usually required for the conventional "render this pile of triangles" approach to 3D graphics rendering. The infinite plane is just one among several special graphics primitives I am interested in designing custom renderers for, including infinite lines, transparent volumes, and perfect spheres, cylinders, and other quadric surfaces.

Why not just draw a really big rectangle?

The first thing I tried was to just draw a very very large rectangle, made of two triangles. But the horizon did not look uniform. I suppose one could fake it better with a more complex set of triangles. But I knew it might be possible to do something clever to render a mathematically perfect infinite plane. A plane that really extends endlessly all the way to the horizon.

How do you draw the horizon?

The horizon of an infinite plane always appears as a mathematically perfect straight line in the perspective projections ordinarily used in 3D graphics and VR. This horizon line separates the universe into two equal halves. Therefore, our first simple rendering of an infinite plane just needs to find out where that line is, and draw it.

The ocean surface is not really an infinite plane, of course. But it is similar enough to help motivate our expectations of what a truly infinite plane should look like. Notice that the horizon is a straight line. Image courtesy of https://en.wikipedia.org/wiki/File:Into_the_Horizon.jpg

But before we figure out where that line is, we need to set up some mathematical background.

Implicit equation of a plane

A particular plane can be defined using four values A, B, C, D in the implicit equation for a plane:

Ax + By + Cz + D = 0 (1a)

All points (x y z) that satisfy this equation are on the plane. The first three plane values, (A B C), form a vector perpendicular to the plane. I usually scale these three (A B C) values to represent a unit vector with length 1.0. Then the final value, D, is the signed closest distance between the plane and the world origin at (0, 0, 0).
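To make the four plane values concrete, here is a minimal Python sketch that builds (A B C D) from a point on the plane and a normal vector, then evaluates equation (1a). The function names `plane_from_point_normal` and `plane_value` and the y-up ground plane are my own illustrative assumptions, not something taken from the shaders discussed later.

```python
import math

def plane_from_point_normal(point, normal):
    """Return (A, B, C, D), with (A, B, C) scaled to unit length,
    for the plane passing through `point` with the given normal."""
    length = math.sqrt(sum(c * c for c in normal))
    a, b, c = (comp / length for comp in normal)
    # Choose D so that the given point satisfies Ax + By + Cz + D = 0
    d = -(a * point[0] + b * point[1] + c * point[2])
    return (a, b, c, d)

def plane_value(plane, p):
    """Evaluate Ax + By + Cz + D at p. With a unit normal this is the
    signed distance from the plane to p (zero means p is on the plane)."""
    a, b, c, d = plane
    return a * p[0] + b * p[1] + c * p[2] + d

# Hypothetical ground plane (y is up), 1.5 units below the world origin
ground = plane_from_point_normal((0.0, -1.5, 0.0), (0.0, 2.0, 0.0))
print(ground)                                    # the four plane values
print(plane_value(ground, (3.0, -1.5, -7.0)))    # a point on the plane
print(plane_value(ground, (0.0, 0.0, 0.0)))      # the world origin
```

Evaluating the plane equation at the world origin returns D itself, which is the signed-distance interpretation described above.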

This implicit equation for the plane will be used in our custom shaders that we are about to write. It's only four numbers, so it's much more compact than even the "giant rectangle" version of the plane, which takes at least 12 numbers to represent.

When rendering the plane, we will often want to know exactly where the user's view direction intersects the plane. But there is a problem here. If the plane is truly infinite, sometimes that intersection point will consist of obscenely large coordinate values if the viewer is looking near the horizon. How do we avoid numerical problems in that case? Good news! The answer is already built into OpenGL!

Homogeneous coordinates to the rescue

In OpenGL we actually manipulate four dimensional vectors (x y z w) instead of three dimensional vectors (x y z). The w coordinate value is usually 1, and we usually ignore it if we can. It's there to make multiplication with the 4x4 matrices work correctly during projection and translation. In cases where the w is not 1, you can reconstruct the expected (x y z) values by dividing everything by w, to get the point (x/w, y/w, z/w, 1), which is equivalent to the point (x y z w).

But that homogeneous coordinate w is actually our savior here. We are going to pay close attention to w, and we are going to avoid the temptation to divide by w until it is absolutely necessary.

The general principle is this: if we see cases, like points near the plane horizon, where it seems like coordinate values (x y z) might blow up to infinity, let's instead try to keep the (x y z) values smaller and stable, while allowing the w value to approach zero. Allowing w to approach zero is mathematically equivalent to allowing (x y z) to approach infinity, but it does not cause the same numerical stability problems on real world computers - as long as you avoid dividing by w.
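A small Python sketch may help illustrate the idea. It shows a point receding toward infinity while its homogeneous coordinates stay small, and it compares two homogeneous points without ever dividing by w. The helper names `to_cartesian` and `same_point` are made up for this illustration.

```python
def to_cartesian(p):
    """Divide by w to recover (x, y, z).
    Raises ZeroDivisionError when w == 0 (a point at infinity)."""
    x, y, z, w = p
    return (x / w, y / w, z / w)

def same_point(p, q, tol=1e-9):
    """Two nonzero homogeneous points are equivalent when one is a scalar
    multiple of the other. Checked by cross-multiplying every pair of
    components, so no division by w is needed."""
    return all(
        abs(p[i] * q[j] - p[j] * q[i]) <= tol
        for i in range(4)
        for j in range(i + 1, 4)
    )

# Same (x y z), with w shrinking toward zero: the stored numbers stay
# small while the equivalent Cartesian point moves farther and farther away.
for w in (1.0, 0.01, 1e-6):
    p = (0.0, 0.0, -1.0, w)
    print(p, "->", to_cartesian(p))

print(same_point((2.0, 4.0, 6.0, 2.0), (1.0, 2.0, 3.0, 1.0)))
```

The point (0, 0, -1, 0) is still a perfectly ordinary set of four numbers. It only becomes a problem if something tries to divide by its w.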

By the way, the homogeneous version of the plane equation is

Ax + By + Cz + Dw = 0 (1b)

Intersection between view ray and plane

The intersection between a line and a plane (in vector notation, those are cross products and dot products below) is

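I = ((Pn⨯(L⨯V)) - Pd V)/(Pn·V) (2)

where I is the intersection point, Pn is the plane normal (A B C), V is the view direction, L is the camera/viewer location, and Pd is the D component of the plane equation. Expanding the triple product, the numerator is L (Pn·V) - V (Pn·L + Pd), which is what you get by substituting the ray L + tV into the plane equation and solving for t.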

Problems can occur when the denominator of this expression approaches zero. The intersection point needs to be expressed in the form (xyz)/w. Instead of setting w to 1, as usual, let's set w to the denominator Pn·V.

This means that the w (homogeneous) coordinate of the point on the plane intersecting a particular view ray will be the dot product of the view direction with the plane normal. If both are unit vectors, w will range between -1 (when the view direction is directly opposite the plane normal) and +1 (when the view direction is exactly aligned with the plane normal). And the w value will be zero when the view is looking exactly at the horizon.
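Here is a minimal Python sketch of that homogeneous intersection, assuming a unit plane normal and a unit view direction. It is derived by substituting the ray L + tV into the plane equation, which gives a numerator of L (Pn·V) - V (Pn·L + Pd) over the denominator Pn·V. The function name `intersect_homogeneous` and the sample ground plane are my own illustrative assumptions.

```python
def dot(a, b):
    """Dot product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def intersect_homogeneous(plane, eye, view):
    """Return the view-ray/plane intersection as (x, y, z, w), with
    w = Pn . V. Nothing is divided, so a ray aimed at the horizon
    (w == 0) produces finite numbers instead of infinities."""
    pn, pd = plane[:3], plane[3]
    w = dot(pn, view)
    s = dot(pn, eye) + pd  # plane equation evaluated at the eye position
    xyz = tuple(eye[i] * w - view[i] * s for i in range(3))
    return xyz + (w,)

def homogeneous_plane_value(plane, p):
    """Evaluate Ax + By + Cz + Dw, the homogeneous plane equation (1b)."""
    return sum(plane[i] * p[i] for i in range(4))

ground = (0.0, 1.0, 0.0, 1.5)   # hypothetical plane y = -1.5, normal pointing up
eye = (0.0, 0.0, 0.0)

looking_down = intersect_homogeneous(ground, eye, (0.0, -0.6, -0.8))
at_horizon = intersect_homogeneous(ground, eye, (0.0, 0.0, -1.0))

print(looking_down, homogeneous_plane_value(ground, looking_down))
print(at_horizon, homogeneous_plane_value(ground, at_horizon))
```

Both results satisfy equation (1b). Only the first one can safely be divided by w to get an ordinary (x y z) point. The second has w equal to zero, which is exactly the horizon case.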

At this point, we can actually create our first simple rendering of an infinite plane. And we only need to consider the homogeneous w coordinate of the view/plane intersection point at first.

Version 1: Brown plane

Here is the first version of our plane renderer, which only uses the homogeneous w coordinate:

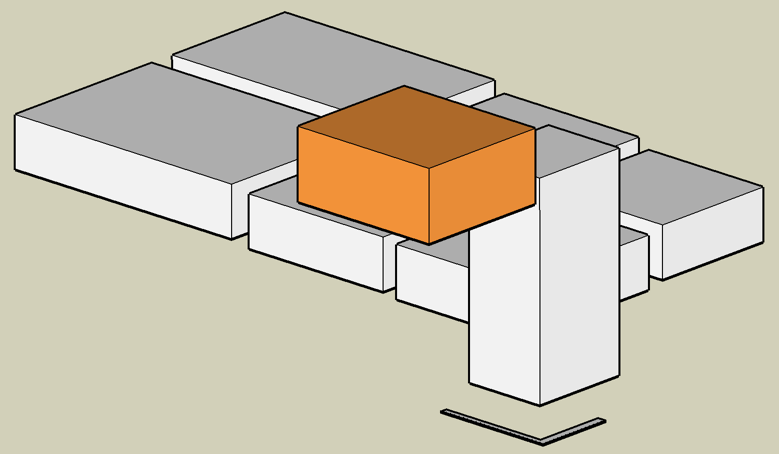

Screenshot as I was viewing this infinite plane in VR

The horizon looks a little higher than it does when you look out at the ocean. But that's physically correct. The earth is not flat.

This plane doesn't intersect other geometry correctly, the horizon shows jaggedy aliasing, there's no texture, and it probably does not scale optimally. We will fix those things later. But this is a good start.
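To illustrate the "only uses w" idea outside of a shader, here is a rough CPU-side Python sketch. It is not the GLSL from the gist linked below. It assumes the viewer sits on the side of the plane that the normal points toward, so a pixel shows the plane exactly when w = Pn·V is negative. The function names, the tiny ASCII image, and the camera setup (looking down -z with y up) are all hypothetical.

```python
def classify_pixel(plane_normal, view_dir):
    """Return 'plane' if this view ray heads toward the plane, else 'sky'.
    Assumes the viewer is on the side the normal points to, so rays with
    w = Pn . V < 0 eventually hit the plane and rays with w >= 0 never do."""
    w = sum(n * v for n, v in zip(plane_normal, view_dir))
    return "plane" if w < 0.0 else "sky"

def render_ascii(plane_normal, width=16, height=8, tan_half_fov=1.0):
    """Render a tiny image: '#' where the plane is visible, '.' for sky."""
    rows = []
    for j in range(height):
        # Map pixel centers to [-1, 1], top row first
        y = (1.0 - 2.0 * (j + 0.5) / height) * tan_half_fov
        row = ""
        for i in range(width):
            x = (2.0 * (i + 0.5) / width - 1.0) * tan_half_fov
            view = (x, y, -1.0)  # unnormalized: only the sign of w matters here
            row += "#" if classify_pixel(plane_normal, view) == "plane" else "."
        rows.append(row)
    return rows

rows = render_ascii((0.0, 1.0, 0.0))
for row in rows:
    print(row)
```

With a level camera and an upward normal, the boundary between the two characters is a straight horizontal line through the middle of the image, which is the horizon. The hard switch at w = 0 is also where the jagged aliasing comes from.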

Source code for the GLSL shaders I used here is at

https://gist.github.com/cmbruns/815fc875afd8fe2755500907325b15f0

Continue to the next post in this series at

https://biospud.blogspot.com/2020/05/infinite-plane-rendering-2-texturing.html

Topics for future posts:

- Texturing the plane

- Using the depth buffer to intersect other geometry correctly

- Antialiasing

- Drawing the horizon line

- What happens if I look underneath that plane?

- More efficient imposter geometries for rendering